I first wrote about this subject back in June 2012 (see: http://beyondrelational.com/modules/2/blogs/76/posts/15290/index-fragmentation-in-sql-azure.aspx) after several conversations with Microsoft about it, and particular concerns over fragmentation in the primary database compared to the secondaries (that’s right, the replicated secondary databases can have different fragmentation levels to the primary). I was convinced that defragging the indexes would help with this scenario, but bowed down to Microsoft’s advice and wrote the post accordingly.

As with anything though, advice and thoughts can change over time. Whilst the advice given in the linked post is still mostly correct – there are still no guarantees on enhancing performance through defragging indexes - there are occasions where an index defrag is beneficial. Take the scenario mentioned above for example. Say you have a lot of data, multiple GBs in fact, with a decent number of indexes present. Your data is sharded, for performance and scalability reasons, meaning you are regularly using a non-sequential ID as the Primary Key, such as a GUID.

Interlude: The reason behind using a GUID? Well, scaling out using shards should also allow scaling in, i.e. merging databases. If your Primary Key for a table is not unique across all shards you’ll get Primary Key violations on the merge (cue heated discussion about sequential IDs, secondary lookups, COMBs, blah, blah).

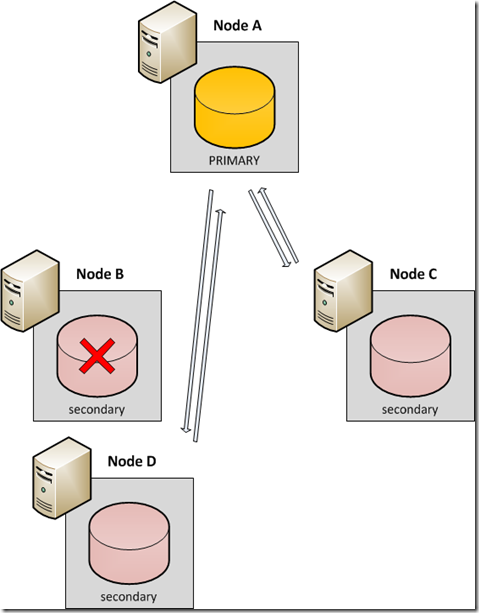

FILLFACTOR is fixed at 0 (100%) in WASD, meaning as you insert records you naturally incur page splits and fragmentation in your indexes. This means under-filled pages and wasted space, bloating your overall index sizes. Not only does this cost you more money, but it also has another peculiar side effect – that of mismatches in fragmentation levels between replicas. In a multi-tenant architecture, you have no control over the physical nodes on which your databases reside, nor their secondaries, and the Azure fabric controller performs lots of black box operations unbeknownst to us such as moving databases between physical nodes when necessary. Continuing our example, imagine you have the following physical setup:

Now suppose Node B becomes extremely busy due to other tenants residing there. Your replication is struggling to complete slowing down the transactions in the primary, and the fabric controller ultimately decides the database needs to be moved to a different node:

The process of moving and rebuilding the database on a different node can trigger a rebuild of the indexes. The end result? You have replicas with different fragmentation levels. At this point, a failover to the relocated secondary will cause a jump in space used. The more indexes and fragmentation you have, the larger the jump. Initially, the jump will be downwards – that’s cool right? Works out cheaper, and fragmentation is fixed. But how about if we fail back? Yup, our space used in the database suddenly increases (assuming the failover is to the former primary database, and that nothing has triggered a rebuild of it in the meantime).

No doubt you’re keeping track of how full your databases are though, so there’s no way the jump would take you up to your maximum database size, right? That’s ok then.

As I alluded to earlier, defragging your indexes regularly can mitigate against this scenario. But CAUTION – the same rules and limitations apply. There is limited transaction log usage, tempdb, and it’s a large physical operation so there is increased risk of throttling. Rebuilding ONLINE reduces the transaction size, but with large indexes (multi-GB) you still run the risk of it failing. My advice – consider a nice old-fashioned technique called Partitioned Views (see http://technet.microsoft.com/en-us/library/ms190019%28v=sql.105%29.aspx). If no logical partition exists on a table, create one using a hash function. It increases the administration overhead a little, but allows you to reduce your index sizes by splitting the data into separate partitions.

Rebuilding indexes in WASD? Easy peasy.

No comments:

Post a Comment