In my last post (http://sqltuna.blogspot.co.uk/2013/10/index-fragmentation-in-wasd.html) I ended with a reference for using Partitioned Views to reduce index sizes and allow defragmentation processes to run successfully. This is because they allow each member table to have their indexes rebuilt individually meaning smaller datasets and smaller transactions. My claim of it being “easy peasy” is possibly far fetched, so I felt a follow-up post was needed. Although Partitioned Views is not a new concept in SQL Server, WASD is a different platform, and I thought it may prove useful to focus on how to partition your data in this environment.

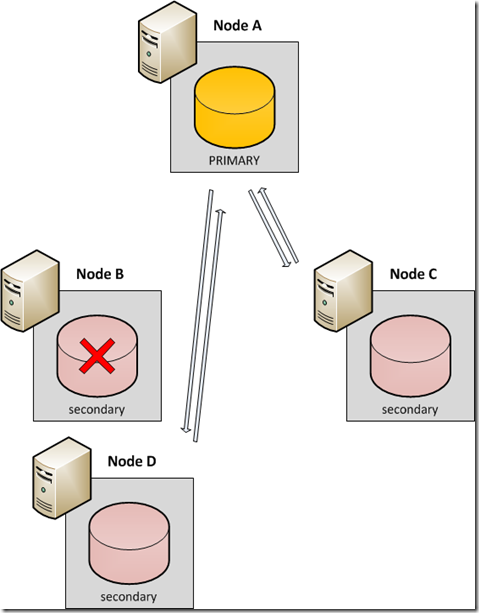

First of all, as the observant reader, you have no doubt guessed that Partitioned Views are indeed supported on WASD. You may not know however that Partitioned Tables & Indexes are not supported. In the context of rebuilding indexes that’s not an issue, as partitioned tables or indexes cannot have individual partitions rebuilt online, unless you’re a crazy fool running SQL Server 2014 CTP1+ in production. Therefore Partitioned Views do us just fine.

So, without getting into a full explanation of a Partitioned View, we essentially need to find or create one or more columns to constrain using CHECKs, keeping the datasets in each member table mutually exclusive.

What do I partition on?

The link above gives a nice, neat example using years and months for sales data. In practice, your data does not always have an obvious partition. In such cases, you need to create one. In WASD you have 2 approaches – calculate in the database, or calculate in the application tier. We don’t have the luxury of CLRs, so any code-related hashing must be moved into the application tier. This is not a bad option, as it moves the processing where there are commonly more CPUs available, and in the case of Azure, more control over the resources available. For more simplistic hashing in the database, this can be done in the form of a computed column or scalar UDF.

TIP: Whichever approach you take, ensure the source columns contain static data – you do not want to be coding data movements between your member tables due to a data update!

Let’s assume you don’t have a natural key for partitioning your data. We need to hash a source column to produce a “partitionable” column. If we consider a member table to be a “bucket” of data, the hashing algorithm must distribute the data as evenly as possible across the buckets. A very simple example would be where an incremental integer PK exists – your partition column could take the modulus of the PK against the number of buckets, e.g. for 10 buckets, use PK % 10. This will give you an even distribution for the values 0 to 9. BEWARE – if your table contains such a key, which is an IDENTITY field, this is not the solution for you. As soon as you split the data into member tables, you lose the ability to generate an incremental ID across the entire set, as each IDENTITY value will relate to the individual member table. If it’s not an IDENTITY field, test your distribution across the number of desired partitions to see if this approach is appropriate.

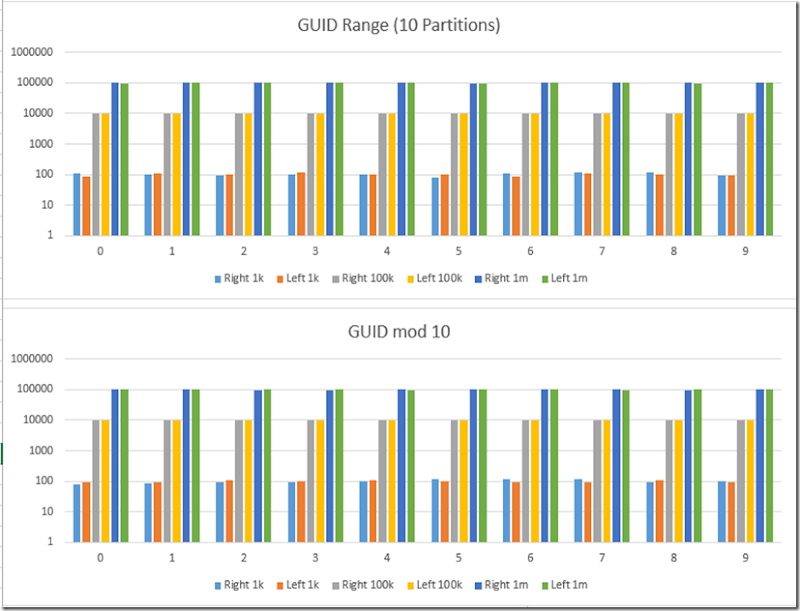

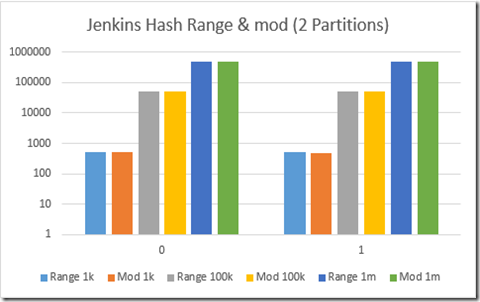

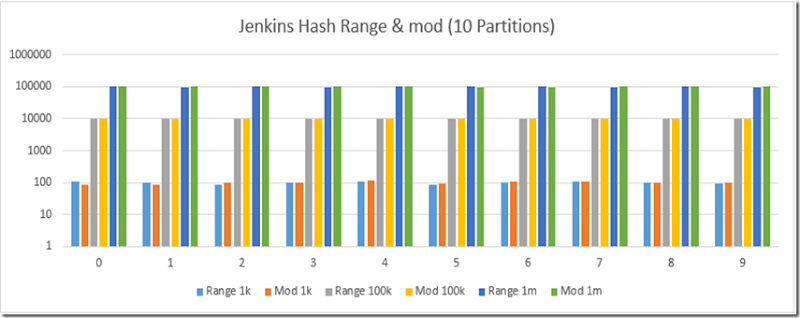

Another point is we’re working in WASD here. And this solution is being considered due to large data volumes and index sizes. The likelihood is your data is sharded across multiple databases to increase throughput, and to allow for scalability you have designed your tables without an incremental key to remove PK clashes when merging datasets. You may already have a hashed ID that you are using for sharding, or perhaps you’re using GUIDs or COMBs. To explore the distribution of these various options I ran a comparison based on the following hashing options, where n is the number of partitions (or member tables):

- The rightmost 4 bytes of a GUID, converted to an integer, split into n equal ranges

- The rightmost 4 bytes of a GUID, converted to an integer (absolute), mod n

- The leftmost 4 bytes of a GUID or COMB, converted to an integer, split into n equal ranges

- The leftmost 4 bytes of a GUID or COMB, converted to an integer (absolute), mod n

- The hash of a GUID using an implementation of the Jenkins hash, split into n equal ranges

- The hash of a GUID using an implementation of the Jenkins hash, mod n

This list is clearly not exhaustive, but is a very good start to a simple hashing mechanism for creating a Partitioned View. I excluded using the rightmost part of a COMB due to the way it is constructed, i.e. using the system date & time. It is clear even without testing that the results would be heavily dependent on the time records were inserted, rather than based on a consistent algorithm – I wanted to avoid this approach. The Jenkins hash is a simple, yet effective, hashing algorithm that I have seen used in a large-scale sharding architecture. The implementation I am using for the tests is in C#, and produces a number in the positive BIGINT range from a String input. It is highly effective at producing an even distribution across the positive BIGINT range, even from the smallest change to the input value, and with very little chance of duplicates. I have personally tested this algorithm using 2.5 billion usernames without any collisions (!). Yes, it needs to be run in the application tier, but is a worthwhile consideration for many architectural partitioning concerns. Here is the code (courtesy of a developer colleague of mine):

{

ulong hash = 0;

foreach (byte b in System.Text.Encoding.Unicode.GetBytes(input.ToLower()))

{

hash += b;

hash += (hash << 10);

hash ^= (hash >> 6);

}

hash += (hash << 3);

hash ^= (hash >> 11);

hash += (hash << 15);

return (long)(hash % long.MaxValue);

}

The comparison was performed in a single table, with results shown across 1k, 100k and 1m rows for 2, 10 and 20 partitions. I am cheating a little for these tests and running it all locally so I can make use of a CLR function for the Jenkins hash. It was that or process each row one at a time in WASD… (from an application perspective this is not an issue, as rows tend to be dealt with individually). Here is the script I used for creating and populating all the hash values:

DROP TABLE dbo.DistributionTest;

GO

-- I am using computed columns to do the work here and populate partition numbers based on my criteria

CREATE TABLE dbo.DistributionTest

(

ID int IDENTITY(1,1) NOT NULL PRIMARY KEY

,baseGUID uniqueidentifier NOT NULL DEFAULT NEWID()

,right4range2 AS (CASE WHEN CONVERT(int,CONVERT(varbinary(4),RIGHT(CONVERT(char(36),baseGUID),8),2)) < 0 THEN 0 ELSE 1 END) PERSISTED

,right4range10 AS (FLOOR((CONVERT(bigint,CONVERT(int,CONVERT(varbinary(4),RIGHT(CONVERT(char(36),baseGUID),8),2)))+2147483648)/429496730)) PERSISTED

,right4range20 AS (FLOOR((CONVERT(bigint,CONVERT(int,CONVERT(varbinary(4),RIGHT(CONVERT(char(36),baseGUID),8),2)))+2147483648)/214748365)) PERSISTED

,right4mod2 AS (ABS((CONVERT(int,CONVERT(varbinary(4),RIGHT(CONVERT(char(36),baseGUID),8),2)))%2)) PERSISTED

,right4mod10 AS (ABS((CONVERT(int,CONVERT(varbinary(4),RIGHT(CONVERT(char(36),baseGUID),8),2)))%10)) PERSISTED

,right4mod20 AS (ABS((CONVERT(int,CONVERT(varbinary(4),RIGHT(CONVERT(char(36),baseGUID),8),2)))%20)) PERSISTED

,left4range2 AS (CASE WHEN CONVERT(int,CONVERT(varbinary(4),LEFT(CONVERT(char(36),baseGUID),8),2)) < 0 THEN 0 ELSE 1 END) PERSISTED

,left4range10 AS (FLOOR((CONVERT(bigint,CONVERT(int,CONVERT(varbinary(4),LEFT(CONVERT(char(36),baseGUID),8),2)))+2147483648)/429496730)) PERSISTED

,left4range20 AS (FLOOR((CONVERT(bigint,CONVERT(int,CONVERT(varbinary(4),LEFT(CONVERT(char(36),baseGUID),8),2)))+2147483648)/214748365)) PERSISTED

,left4mod2 AS (ABS((CONVERT(int,CONVERT(varbinary(4),LEFT(CONVERT(char(36),baseGUID),8),2)))%2)) PERSISTED

,left4mod10 AS (ABS((CONVERT(int,CONVERT(varbinary(4),LEFT(CONVERT(char(36),baseGUID),8),2)))%10)) PERSISTED

,left4mod20 AS (ABS((CONVERT(int,CONVERT(varbinary(4),LEFT(CONVERT(char(36),baseGUID),8),2)))%20)) PERSISTED

,jenkinsrange bigint NULL

,jenkinsmod2 tinyint NULL

,jenkinsmod10 tinyint NULL

,jenkinsmod20 tinyint NULL

);

GO

INSERT INTO dbo.DistributionTest DEFAULT VALUES;

-- insert 1m rows - ID can be used to find distributions up to 1k and 100k

GO 1000000

-- use CLR to apply Jenkins hash

UPDATE dbo.DistributionTest

SET jenkinsrange = dbo.LongHash(CONVERT(char(36),baseGUID));

GO

UPDATE dbo.DistributionTest

SET jenkinsmod2 = jenkinsrange%2

,jenkinsmod10 = jenkinsrange%10

,jenkinsmod20 = jenkinsrange%20;

GO

SELECT TOP 10 *

FROM dbo.DistributionTest;

GO

RESULTS:

Conclusion

That’s a lot of data to take in! Essentially though, all approaches produce an acceptable distribution across the partitions, even for low data volumes. If you have a unique GUID in your dataset, then any of these approaches will work for you. Consider putting the partitioning logic into the application tier too, as there is often more processing power available than in WASD. No GUID? No worries! Any unique column should work with the Jenkins hash – simply convert to a String first and feed it in. Adding a unique GUID is not exactly difficult either… In fact my WASD preference (in most cases) is to use a GUID as the Primary Key, allowing for scalability through sharding, as well as the basis for a well distributed partitioning key. A quick statement for the anti-GUID clan out there - your Primary Key does not have to be your clustering key. Cluster on an incremental field if fragmentation on insert is a concern. Having your Primary Key as the basis of your partitioning allows the application to generate the partition key and utilise the Partitioned View without (much) additional work. Bear this in mind when designing your solution – if the Primary Key is not your app’s usual entry point then use a column that is.

Finally, if you want your Partitioned View to be updateable then pay attention to the requirements for this (a useful link here: http://technet.microsoft.com/en-us/library/ms187067%28v=sql.105%29.aspx). Avoid computed columns, timestamps and IDENTITY columns etc, and remember to add your partitioning column(s) to your member tables’ Primary Keys.

Partitioned Views in WASD – easy peasy? Armed with a few techniques, not far off I think ![]()