I saw a question on twitter not too long ago regarding page compression. It was posted by Klaus Aschenbrenner (blog|twitter) and read as follows:

What is SQL Server doing with free space on a page (e. g. low Fill Factor) when applying Page Compression? #sqlhelp

Andreas Wolter (blog|twitter) provided an answer Klaus seemed happy with, relating to this page in BOL which states that page compression is only applied once the page is full.

For me, there are still too many unanswered questions here. What constitutes “full”? Does FILLFACTOR influence this? If not, does that mean setting a FILLFACTOR on an index render page compression effectively pointless?

The only way to show what happens is to run through a compression scenario. To start with we need a table, uncompressed of course. On the basis that page level compression always applies row compression until it is “full” we want to make the rows fairly difficult to compress. We also want only a few rows to fill a page to help test this. So we use a 4-byte varbinary ID and a 790-byte varbinary filler. Both columns carry a 2-byte overhead as they are variable length, as well as an extra 2 bytes holding the count of variable length columns. We also have the various row overheads, in our case 7 bytes, so in total our record is 4+2+790+2+2+7 = 807 bytes (see a great description of the row structure here: http://www.practicalsqldba.com/2012/08/sql-serverunderstanding-data-record.html). Varbinary is unaffected by row compression, so in theory this should allow us 10 records per page.

Tip: a data page is 8192 bytes. 96 bytes are used for the header, plus a 2-byte overhead for each row offset at the foot of the page. Therefore for 10 rows we are left with 8192-96-(10*2) = 8076 bytes to fill. That’s why I chose a record length of 807 bytes…

Let’s create the table and add 10 records:

if object_id('dbo.CompressionTest') is not null

drop table dbo.CompressionTest;

go

-- create table uncompressed

create table dbo.CompressionTest

(

ID varbinary(4) not null

,Filler varbinary(790) not null

,constraint pk_CompressionTest_ID primary key clustered (ID)

);

go

-- add 10 rows

insert into dbo.CompressionTest

(ID, Filler)

select 0x00000001, cast(replicate(0x01,790) as varbinary(790)) union all

select 0x00000002, cast(replicate(0x01,790) as varbinary(790)) union all

select 0x00000003, cast(replicate(0x01,790) as varbinary(790)) union all

select 0x00000004, cast(replicate(0x01,790) as varbinary(790)) union all

select 0x00000005, cast(replicate(0x01,790) as varbinary(790)) union all

select 0x00000006, cast(replicate(0x01,790) as varbinary(790)) union all

select 0x00000007, cast(replicate(0x01,790) as varbinary(790)) union all

select 0x00000008, cast(replicate(0x01,790) as varbinary(790)) union all

select 0x00000009, cast(replicate(0x01,790) as varbinary(790)) union all

select 0x0000000A, cast(replicate(0x01,790) as varbinary(790));

go

Let’s make sure we only have 1 data page:

set @db = db_name();

dbcc ind(@db,'dbo.CompressionTest',1);

go

Results:

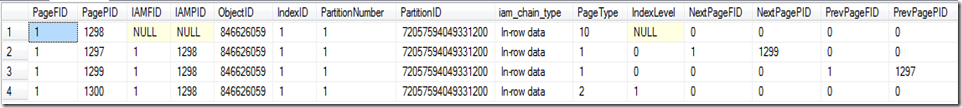

You can see that the highlighted row has a PageType of 1, which is a data page. We only have 1 of them, ID 1297. Let’s have a look at the page header:

set @db = db_name();

dbcc traceon(3604);

dbcc page (@db,1,1297,0);

go

PAGE: (1:1297)

BUFFER:

BUF @0x000000037C2A2100

bpage = 0x000000036F0D4000 bhash = 0x0000000000000000 bpageno = (1:1297)

bdbid = 5 breferences = 0 bcputicks = 0

bsampleCount = 0 bUse1 = 15638 bstat = 0x10b

blog = 0x215acccc bnext = 0x0000000000000000

PAGE HEADER:

Page @0x000000036F0D4000

m_pageId = (1:1297) m_headerVersion = 1 m_type = 1

m_typeFlagBits = 0x0 m_level = 0 m_flagBits = 0x8000

m_objId (AllocUnitId.idObj) = 276 m_indexId (AllocUnitId.idInd) = 256

Metadata: AllocUnitId = 72057594056015872

Metadata: PartitionId = 72057594048675840 Metadata: IndexId = 1

Metadata: ObjectId = 622625261 m_prevPage = (0:0) m_nextPage = (0:0)

pminlen = 4 m_slotCnt = 10 m_freeCnt = 6

m_freeData = 8166 m_reservedCnt = 0 m_lsn = (544:212:31)

m_xactReserved = 0 m_xdesId = (0:0) m_ghostRecCnt = 0

m_tornBits = 0 DB Frag ID = 1

Allocation Status

GAM (1:2) = ALLOCATED SGAM (1:3) = ALLOCATED

PFS (1:1) = 0x60 MIXED_EXT ALLOCATED 0_PCT_FULL DIFF (1:6) = CHANGED

ML (1:7) = NOT MIN_LOGGED

I have highlighted a couple of key header attributes above. Firstly, m_slotCnt confirms we have 10 records on the page. Secondly, m_freeCnt shows we have 6 bytes remaining – this fits perfectly with our calculation above.

So if we add one more record then check again, we should see another page added:

(ID, Filler)

select 0x0000000B, cast(replicate(0x01,790) as varbinary(790));

go

declare @db sysname;

set @db = db_name();

dbcc ind(@db,'dbo.CompressionTest',1);

go

And that’s it confirmed. Page 1300 has been added, and also an index page (PageType 2) which is now needed due to there being more than one data page.

So, to the nitty gritty. Let’s re-run the steps above but with page compression turned on:

if object_id('dbo.CompressionTest') is not null

drop table dbo.CompressionTest;

go

-- create table uncompressed

create table dbo.CompressionTest

(

ID varbinary(4) not null

,Filler varbinary(790) not null

,constraint pk_CompressionTest_ID primary key clustered (ID)

) with (data_compression = page);

go

-- add 10 rows

insert into dbo.CompressionTest

(ID, Filler)

select 0x00000001, cast(replicate(0x01,790) as varbinary(790)) union all

select 0x00000002, cast(replicate(0x01,790) as varbinary(790)) union all

select 0x00000003, cast(replicate(0x01,790) as varbinary(790)) union all

select 0x00000004, cast(replicate(0x01,790) as varbinary(790)) union all

select 0x00000005, cast(replicate(0x01,790) as varbinary(790)) union all

select 0x00000006, cast(replicate(0x01,790) as varbinary(790)) union all

select 0x00000007, cast(replicate(0x01,790) as varbinary(790)) union all

select 0x00000008, cast(replicate(0x01,790) as varbinary(790)) union all

select 0x00000009, cast(replicate(0x01,790) as varbinary(790)) union all

select 0x0000000A, cast(replicate(0x01,790) as varbinary(790));

go

declare @db sysname;

set @db = db_name();

dbcc ind(@db,'dbo.CompressionTest',1);

go

I’ve highlighted the only difference, and also included the DBCC IND step. The output is as we expect with only one data page created:

So what about the page header?

PAGE: (1:1297)

BUFFER:

BUF @0x000000037C2A2100

bpage = 0x000000036F0D4000 bhash = 0x0000000000000000 bpageno = (1:1297)

bdbid = 5 breferences = 0 bcputicks = 0

bsampleCount = 0 bUse1 = 17272 bstat = 0xb

blog = 0xcccc7acc bnext = 0x0000000000000000

PAGE HEADER:

Page @0x000000036F0D4000

m_pageId = (1:1297) m_headerVersion = 1 m_type = 1

m_typeFlagBits = 0x0 m_level = 0 m_flagBits = 0x8000

m_objId (AllocUnitId.idObj) = 278 m_indexId (AllocUnitId.idInd) = 256

Metadata: AllocUnitId = 72057594056146944

Metadata: PartitionId = 72057594048806912 Metadata: IndexId = 1

Metadata: ObjectId = 686625489 m_prevPage = (0:0) m_nextPage = (0:0)

pminlen = 3 m_slotCnt = 10 m_freeCnt = 56

m_freeData = 8116 m_reservedCnt = 0 m_lsn = (544:379:31)

m_xactReserved = 0 m_xdesId = (0:0) m_ghostRecCnt = 0

m_tornBits = 0 DB Frag ID = 1

Allocation Status

GAM (1:2) = ALLOCATED SGAM (1:3) = ALLOCATED

PFS (1:1) = 0x60 MIXED_EXT ALLOCATED 0_PCT_FULL DIFF (1:6) = CHANGED

ML (1:7) = NOT MIN_LOGGED

I’ve highlighted the same attributes. Notice that for the same number of records, we have 50 extra bytes due to row compression.

Now, as per BOL if we add one more record the page compression should kick in:

(ID, Filler)

select 0x0000000B, cast(replicate(0x01,790) as varbinary(790));

go

declare @db sysname;

set @db = db_name();

dbcc ind(@db,'dbo.CompressionTest',1);

go

Success, we still only have one data page! It’s worth noting at this point why I used a consistent value in my Filler column – if the values were unique we wouldn’t get any page compression, but by repeating the value across rows we see the compression benefits.

Again, let’s have a look at the page header:

PAGE: (1:1297)

BUFFER:

BUF @0x000000037C2A2100

bpage = 0x000000036F0D4000 bhash = 0x0000000000000000 bpageno = (1:1297)

bdbid = 5 breferences = 0 bcputicks = 0

bsampleCount = 0 bUse1 = 11930 bstat = 0xb

blog = 0xcc7acccc bnext = 0x0000000000000000

PAGE HEADER:

Page @0x000000036F0D4000

m_pageId = (1:1297) m_headerVersion = 1 m_type = 1

m_typeFlagBits = 0x80 m_level = 0 m_flagBits = 0x8000

m_objId (AllocUnitId.idObj) = 279 m_indexId (AllocUnitId.idInd) = 256

Metadata: AllocUnitId = 72057594056212480

Metadata: PartitionId = 72057594048872448 Metadata: IndexId = 1

Metadata: ObjectId = 718625603 m_prevPage = (0:0) m_nextPage = (0:0)

pminlen = 3 m_slotCnt = 11 m_freeCnt = 7168

m_freeData = 1002 m_reservedCnt = 0 m_lsn = (544:459:39)

m_xactReserved = 0 m_xdesId = (0:0) m_ghostRecCnt = 0

m_tornBits = 0 DB Frag ID = 1

Allocation Status

GAM (1:2) = ALLOCATED SGAM (1:3) = ALLOCATED

PFS (1:1) = 0x60 MIXED_EXT ALLOCATED 0_PCT_FULL DIFF (1:6) = CHANGED

ML (1:7) = NOT MIN_LOGGED

So we have 11 records, as we know, and page compression has left us with 7168 free bytes on the page. Pretty impressive, although it’s obviously data dependent, and remember that I purposely chose row values that would compress well.

How does FILLFACTOR affect this? To test it out I ran the same steps as above but with the FILLFACTOR set to 50, that is, when inserting records through a DDL operation* a new page will be used once the previous page is 50% full.

* with DML operations SQL Server fills every page to 100% regardless of the FILLFACTOR setting. Only on creating a new index from existing data, or REBUILDing an index, does the FILLFACTOR apply. We therefore need to REBUILD an index after inserting data in order to see the effects.

In our case, we insert 6 records without page compression, then REBUILD our clustered index. I’ll not re-post all of the SQL above, merely highlight the changes from here on:

(

....

,constraint pk_CompressionTest_ID primary key clustered (ID)

with (fillfactor = 50)

) with (data_compression = none);

rebuild

go

Looking at DBCC IND we still have only one data page. If you add one more record, then REBUILD the index once again, you’ll see the FILLFACTOR come into play:

PAGE: (1:1297)

BUFFER:

BUF @0x000000037C2A2100

bpage = 0x000000036F0D4000 bhash = 0x0000000000000000 bpageno = (1:1297)

bdbid = 5 breferences = 0 bcputicks = 103

bsampleCount = 1 bUse1 = 16349 bstat = 0x109

blog = 0xccccdc9a bnext = 0x0000000000000000

PAGE HEADER:

Page @0x000000036F0D4000

m_pageId = (1:1297) m_headerVersion = 1 m_type = 1

m_typeFlagBits = 0x0 m_level = 0 m_flagBits = 0x220

m_objId (AllocUnitId.idObj) = 286 m_indexId (AllocUnitId.idInd) = 256

Metadata: AllocUnitId = 72057594056671232

Metadata: PartitionId = 72057594049331200 Metadata: IndexId = 1

Metadata: ObjectId = 846626059 m_prevPage = (0:0) m_nextPage = (1:1299)

pminlen = 4 m_slotCnt = 6 m_freeCnt = 3242

m_freeData = 4938 m_reservedCnt = 0 m_lsn = (545:329:28)

m_xactReserved = 0 m_xdesId = (0:5180845) m_ghostRecCnt = 0

m_tornBits = 196210610 DB Frag ID = 1

Allocation Status

GAM (1:2) = ALLOCATED SGAM (1:3) = ALLOCATED

PFS (1:1) = 0x60 MIXED_EXT ALLOCATED 0_PCT_FULL DIFF (1:6) = CHANGED

ML (1:7) = NOT MIN_LOGGED

The initial 6 records have remained on the first page after the REBUILD, and the 7th record has been moved to the next page.

Let’s try this with page compression turned on. Repeating the steps, here is the final output of DBCC IND and PAGE:

PAGE: (1:1299)

BUFFER:

BUF @0x000000037C22D200

bpage = 0x000000036F822000 bhash = 0x0000000000000000 bpageno = (1:1299)

bdbid = 5 breferences = 0 bcputicks = 0

bsampleCount = 0 bUse1 = 16634 bstat = 0x109

blog = 0x5ab21c9a bnext = 0x0000000000000000

PAGE HEADER:

Page @0x000000036F822000

m_pageId = (1:1299) m_headerVersion = 1 m_type = 1

m_typeFlagBits = 0x80 m_level = 0 m_flagBits = 0x220

m_objId (AllocUnitId.idObj) = 289 m_indexId (AllocUnitId.idInd) = 256

Metadata: AllocUnitId = 72057594056867840

Metadata: PartitionId = 72057594049527808 Metadata: IndexId = 1

Metadata: ObjectId = 910626287 m_prevPage = (0:0) m_nextPage = (0:0)

pminlen = 3 m_slotCnt = 7 m_freeCnt = 7212

m_freeData = 966 m_reservedCnt = 0 m_lsn = (545:422:25)

m_xactReserved = 0 m_xdesId = (0:5180858) m_ghostRecCnt = 0

m_tornBits = 312998929 DB Frag ID = 1

Allocation Status

GAM (1:2) = ALLOCATED SGAM (1:3) = ALLOCATED

PFS (1:1) = 0x60 MIXED_EXT ALLOCATED 0_PCT_FULL DIFF (1:6) = CHANGED

ML (1:7) = NOT MIN_LOGGED

It seems page compression has come into play. SQL Server has used the FILLFACTOR setting on *uncompressed* data to work out what constitutes a “full” page, at which point page compression has been applied and the extra row added to the same page.

But can we have a “double” FILLFACTOR? That is, when the compressed page reaches 50% full will a new page be added? If we insert 5 more records, but this time use *unique* values in the Filler column to stop them compressing:

(ID, Filler)

select 0x00000011, cast(replicate(0x11,790) as varbinary(790)) union all

select 0x00000012, cast(replicate(0x12,790) as varbinary(790)) union all

select 0x00000013, cast(replicate(0x13,790) as varbinary(790)) union all

select 0x00000014, cast(replicate(0x14,790) as varbinary(790)) union all

select 0x00000015, cast(replicate(0x15,790) as varbinary(790));

go

Looking at the page header output, we see 12 records inserted and more than half the page is used. Now running a REBUILD of the index to force the FILLFACTOR setting gives us the following:

PAGE: (1:1299)

BUFFER:

BUF @0x000000037C22D200

bpage = 0x000000036F822000 bhash = 0x0000000000000000 bpageno = (1:1299)

bdbid = 5 breferences = 0 bcputicks = 0

bsampleCount = 0 bUse1 = 18109 bstat = 0x109

blog = 0x1c9adc9a bnext = 0x0000000000000000

PAGE HEADER:

Page @0x000000036F822000

m_pageId = (1:1299) m_headerVersion = 1 m_type = 1

m_typeFlagBits = 0x80 m_level = 0 m_flagBits = 0x220

m_objId (AllocUnitId.idObj) = 301 m_indexId (AllocUnitId.idInd) = 256

Metadata: AllocUnitId = 72057594057654272

Metadata: PartitionId = 72057594050314240 Metadata: IndexId = 1

Metadata: ObjectId = 1006626629 m_prevPage = (0:0) m_nextPage = (1:1325)

pminlen = 3 m_slotCnt = 11 m_freeCnt = 4000

m_freeData = 4170 m_reservedCnt = 0 m_lsn = (546:350:28)

m_xactReserved = 0 m_xdesId = (0:5180912) m_ghostRecCnt = 0

m_tornBits = 1076126332 DB Frag ID = 1

Allocation Status

GAM (1:2) = ALLOCATED SGAM (1:3) = ALLOCATED

PFS (1:1) = 0x60 MIXED_EXT ALLOCATED 0_PCT_FULL DIFF (1:6) = CHANGED

ML (1:7) = NOT MIN_LOGGED

The twelfth record has been moved to a new page, so there it is – proof of what SQL Server considers a “full” page. It uses the FILLFACTOR based on uncompressed data as a trigger for page compression, then applies a FILLFACTOR once page compression is active.

Predicting storage overhead when compression is involved is always tricky, as it varies so much depending on the data types used and the data stored. Using sp_estimate_data_compression_savings is still the best option – from experimentation it seems to account for the FILLFACTOR setting.

As a final note – be aware of your FILLFACTOR setting before REBUILDing an index, compressed or not. You could end up with a much larger data footprint than you expected.

Oh yeah, I ran all of this on SQL 2012 EE SP1. I’d love to know if anyone sees different results on a different version.